Table Of Content

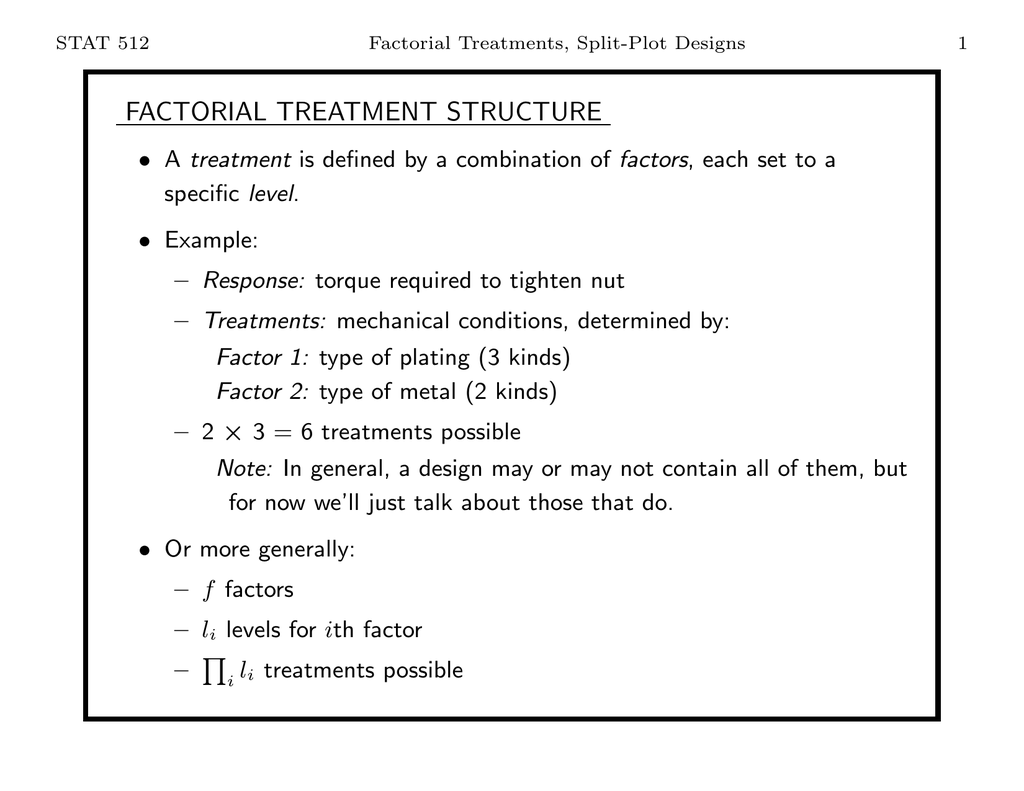

However, other combinations of independent variables are not independent from one another and they produce interactions. Remember, independent variables are always manipulated independently from the measured variable (see margin note), but they are not necessarilly independent from each other. Chakraborty et al., (Chakraborty et al., 2009) noted that factorial designs may not perform optimally for intervention selection in cases where there are weak main effects, but relatively strong interaction effects. Unfortunately, this situation may be a fairly common occurrence in factorial experiments of clinical interventions (e.g., Cook et al., 2016; Piper et al., 2016; Schlam et al., 2016). Investigators may also wish to include measures in their factorial experiments that assess potential alternative explanations for their findings.

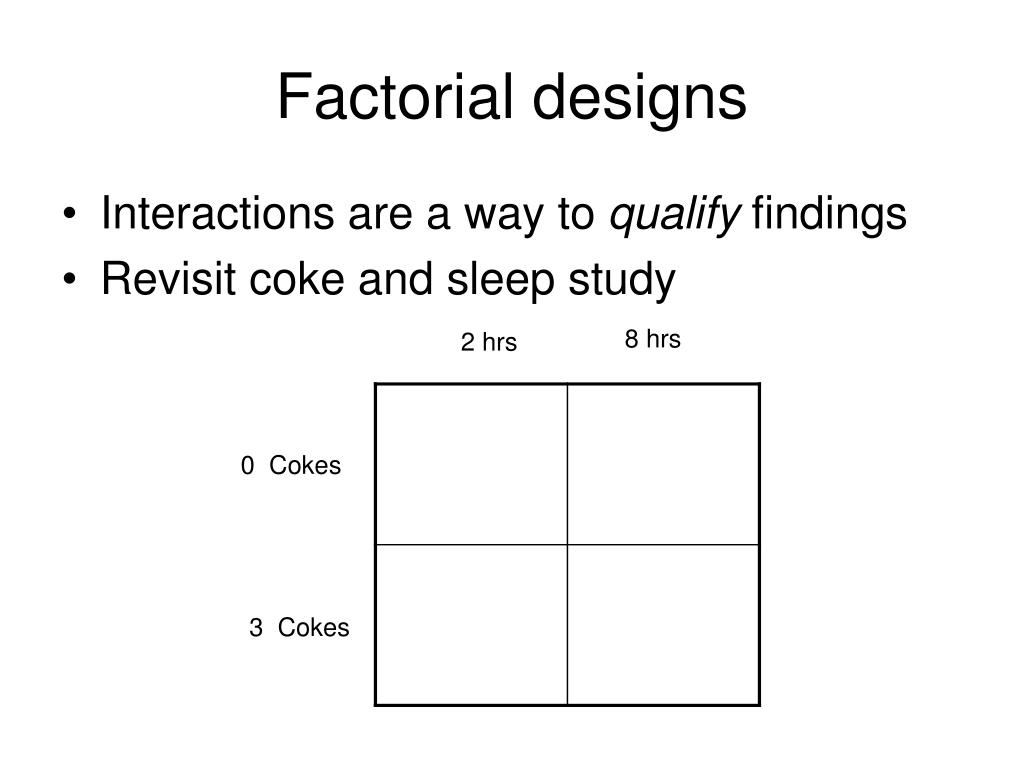

Visualizing Main Effects & Interaction Effects

The experiment can be replicated, or the sparsity-of-effects principle can often be exploited. Replication is more common for small experiments and is a very reliable way of assessing experimental error. When the number of factors is large (typically more than about 5 factors, but this does vary by application), replication of the design can become operationally difficult. In these cases, it is common to only run a single replicate of the design, and to assume that factor interactions of more than a certain order (say, between three or more factors) are negligible. Under this assumption, estimates of such high order interactions are estimates of an exact zero, thus really an estimate of experimental error.

Checking Residuals Using Minitab's Four in One Plot

More formally, this means that the shoe and hat independent variables do not interact. It would mean that the effect of wearing a shoe on height would depend on wearing a hat. But in some other imaginary universe, it could mean, for example, that wearing a shoe adds 1 to your height when you do not wear a hat, but adds more than 1 inch (or less than 1 inch) when you do wear a hat. This thought experiment will be our entry point into discussing interactions. A take-home message before we begin is that some independent variables (like shoes and hats) do not interact; however, there are many other independent variables that do.

Validation of the Taguchi method on the example of evaluation of willow biomass production factors under ... - ScienceDirect.com

Validation of the Taguchi method on the example of evaluation of willow biomass production factors under ....

Posted: Sat, 01 Oct 2022 07:00:00 GMT [source]

Minitab®

Assessing the potentialities of an easy-to-use sample treatment strategy: multivariate investigation on “Moka extraction ... - ScienceDirect.com

Assessing the potentialities of an easy-to-use sample treatment strategy: multivariate investigation on “Moka extraction ....

Posted: Mon, 11 Mar 2024 09:22:27 GMT [source]

In this example B, C and D were the three main effects and there were two interactions BD and BC. From Minitab we can reproduce the normal probability plot for the full model. The first is to take a higher order interaction out of the model and use them as the estimate of error. Next, what we did at the end of the process is drop that factor entirely. If a particular factor in the screening experiment turns out to be not important either as a main effect or as part of any interaction we can remove it.

First, does the effect of being tired depend on the levels of the time since last meal? Look first at the effect of being tired only for the “1 hour condition”. We see the red bar (tired) is 1 unit lower than the green bar (not tired). So, there is an effect of 1 unit of being tired in the 1 hour condition.

How is this related to Factorial Designs?

We have already seen that factorial experiments can include manipulated independent variables or a combination of manipulated and non-manipulated independent variables. But factorial designs can also consist exclusively of non-manipulated independent variables, in which case they are no longer experiments but correlational studies. Consider a hypothetical study in which a researcher measures two variables. First, the researcher measures participants’ mood and self-esteem.

This can be conceptualized as a 2 × 2 factorial design with mood (positive vs. negative) and self-esteem (high vs. low) as non-manipulated between-subjects factors. But factorial designs can also include only non-manipulated independent variables, in which case they are no longer experiments but are instead non-experimental (cross-sectional) in nature. Recall that in a simple between-subjects design, each participant is tested in only one condition. In a simple within-subjects design, each participant is tested in all conditions. In a factorial experiment, the decision to take the between-subjects or within-subjects approach must be made separately for each independent variable. In a between-subjects factorial design, all of the independent variables are manipulated between subjects.

& 4. Measure Performance to Find an Effect

It is tempting to take advantage of the efficiency of the factorial experiment and use it to evaluate many components since power is unrelated to the number of factors, and therefore, a single experiment can be used to screen many components. However, the number of factors used and the types and number of levels per factor can certainly affect staff burden. A 5-factor design with 2-levels/factor yields some 32 unique combinations of components (Table 1), and requires that at least five different active or “on” ICs be delivered. Moreover, if instead of “off” or no-treatment conditions, less intensive levels of components are used, then even more ICs must be delivered (albeit some of reduced intensity). Factorial design is an important method to determine the effects of multiple variables on a response. Traditionally, experiments are designed to determine the effect of ONE variable upon ONE response.

Achieving the Right Component Comparisons

However, in many cases, two factors may be interdependent, and it is impractical or false to attempt to analyze them in the traditional way. If you are willing to assume, and if it is true that there is no interaction, then you can use the interaction as your F-test denominator for testing the main effects. If it is not true then the MSab will tend to be larger than it should be, so the F-test is conservative.

One of the purposes of a factorial design is to be efficient about estimating and testing factors A and B in a single experiment. Sometimes, we are also interested in knowing whether the factors interact. In either case, the first test we should do is the test on the interaction effects. The interaction effects situation is the last outcome that can be detected using factorial design.

Now we are going to shift gears and look at factorial design in a quantitative approach in order to determine how much influence the factors in an experiment have on the outcome. Nature, he suggests, will best respond to "a logical and carefully thought out questionnaire". A factorial design allows the effect of several factors and even interactions between them to be determined with the same number of trials as are necessary to determine any one of the effects by itself with the same degree of accuracy. An interaction is a result in which the effects of one experimental manipulation depends upon the experimental manipulation of another independent variable. What we want to do next is look at the residuals vs. variables A, B, C, D in a reduced model with just the main effects as none of the interactions seemed important. Let's go back to the drill rate example (Ex6-3.MTW | Ex6-3.csv) where we saw the fanning effect in the plot of the residuals.

This is a matter of knowing something about the context for your experiment. When choosing the levels of your factors, we only have two options - low and high. You can pick your two levels low and high close together or you can pick them far apart. As most of you know from regression the further apart your two points are the less variance there is in the estimate of the slope. The variance of the slope of a regression line is inversely related the distance between the extreme points.

No comments:

Post a Comment